Keywords: participatory research, makerspaces

Tudor B. Ionescu and Jesse de Pagter

Complement: EU automation policy: Towards ethical, human-centered, trustworthy robots? [pdf]

1. Introduction

Recent debates in peer production research (Smith et al., 2020) point to the resurgence of an automation discourse that calls on information and computer technologies to boost the productivity and competitiveness of European production facilities. The latest version of this discourse, known as “Industry 4.0” (Kagermann et al., 2013), envisions a distributed, interconnected, highly automated factory. Although the notion of a Fourth Industrial Revolution did not trigger significant changes in the traditionally conservative European manufacturing domain, the vision of a distributed, interconnected factory seems to have imperceptibly conquered other aspects of economic and social life, notably through the so-called “gig economy” (Woodcock & Graham, 2019) and a new wave of rationalization of the public and private sectors through digitization. In this context, peer production researchers have asked whether “a socially-constructive approach to technology” can provide a viable alternative to the “depopulated vision of Industry 4.0” (Smith et al., 2020, p. 9), while noting that what Smith et al. (2020) termed “post-automation” is already practiced in hackerspaces, makerspaces, fablabs and other public contexts. Among other things, the vision of post-automation emphasizes “democratic deliberation over the technology itself” and “[s]eeing technology as productive commons” (p. 9).

Together with the policy piece complementary to this paper (De Pagter, 2022), we aim to further explore how the ideas behind such democratic deliberation over technology can be established within the current discussions and developments in robotics. We zoom in on a specific case study which arguably presents interesting and relevant material about participatory robotics research conducted in a makerspace. To be precise, the study focuses on a publicly funded research project that is organized around the idea of “democratizing” collaborative robots (or cobots). Cobots are a specific type of robot with the potential to allow for much closer human-robot collaboration than was previously possible. As part of the studied project, such collaborative industrial robots are being introduced to an Austrian high-tech makerspace. Thanks to its unprecedented safety features, cobot technology has the potential to provide an alternative to the “depopulated vision of Industry 4.0” by promising humans permanent roles in factories as part of “human-robot teams”. Furthermore, cobots reify the compelling vision of a “human-robot symbiosis” (Wilkes et al., 1999), designed to fulfill the seemingly contradictory goals of increasing productivity and flexibility while keeping up worker and customer satisfaction. In this context, the policy piece provides a short analysis of the European Union’s policy-making efforts concerning the future of robotic automation in view of democratizing robotic technology. As the piece states, those policy plans currently emphasize the notion of ethical, human-centred, and trustworthy robots. While critically engaging with such high-level principles, the policy piece argues for an approach that engages with democratic futures of robotics through notions of pluralist technological cultures in which speculative attempts towards democratic technologies and practices can be grounded.

Apart from analyzing cobots as the technological artifacts that are central to the studied project, this paper regards the makerspace as being instrumental in the way the concept of democratization unfolds in the case study. We understand makerspaces as shared machine shops in which different digital, manufacturing, biological and other technologies can be used on the premises by members of the public (Seravalli, 2012). While makerspaces typically provide their members with access to additive and subtractive manufacturing technologies (e.g., 3D printers, laser cutters, etc.), conventional and collaborative industrial robotic arms have only recently started to figure in such locales, with safety concerns and a lack of robotics expertise being important barriers to their appropriation by members of the makerspace communities. While not a new kind of institution (Smith, 2014), makerspaces have gained popularity in the past 15 years by providing infrastructure and support to their members (Dickel et al., 2019) and other interested individuals and institutions to implement their ideas with the help of the latest manufacturing technologies. Studying the introduction of cobots in a makerspace arguably provides an opportunity to analyze post-automation as a democratic alternative to Industry 4.0 from a micro-perspective. The very location of the makerspace enables public access to and creative usages of the cobot. Our analysis is thereby focused on the production of knowledge and the problems entailed by the implicit notions of human-robot collaboration. Previous research emphasized the tensions and contradictions between the ideal of democratizing technology and “neoliberal business-as-usual” (Braybrooke & Smith, 2018). The aim of this paper is to go beyond the realization that democratic institutional models may be misappropriated by business interests.
Instead, we are interested in whether and how different exchanges centered on technologies “in the making” are possible between individual and institutional actors even in “makerspaces defined by institutional encounters” (Braybrooke & Smith, 2018). In this regard, drawing on theory from the fields of science and technology studies (STS) and organizational studies, we conceive of the sociotechnical configuration of the studied project as a trading zone (Galison, 1997; Collins & Evans, 2002).

In the sections that follow, we will first provide a more detailed overview of our case study and methodology. Then, we explore the notion of trading zones in the context of technology democratization. The analysis draws on this nuanced trading zone concept in order to examine how the concept of democratization of technology facilitated the project as well as the subsequent encounters between different kinds of institutions that resulted from it. Based on the analysis of those encounters, we trace two transitions. The first concerns the transformation of entrenched knowledge and practices pertaining to cobot safety as the technology is appropriated by members of the makerspace in question. The second pertains to the reconfiguration of the makerspace brought about by result-oriented collaborations with research institutes and companies, which entailed the projectification and professionalization of its activities. The conclusion discusses the role of the makerspace in the democratization of cobot technology.

2. Case Study and Methodology

Collaborative industrial robots were initially introduced as devices capable of manipulating heavy work pieces in collaboration with human workers (Colgate et al., 1996). To ensure safety, these devices embodied the principle of passive mechanical support, which—combined with traditional robotic technology—brought about the now stabilized image of an industrial cobot as an anthropomorphic robotic arm endowed with strength and sensitivity. Much like personal computers, today, (personal) cobots can also be ordered, installed, and operated by anyone willing to invest in a handy universal helper. They come with downloadable apps and intuitive “zero-programming” user interfaces. Recently, they also started to figure in makerspaces for various purposes. This prompts questions regarding the safety norms that apply in makerspaces, considering that all possible maker applications—from idea to workspace layout and source code—cannot be known in advance; and, more generally, it brings up questions regarding the required safety measures, when an industrial cobot is operated outside of a factory or a research lab. The certification of cobot applications for industrial use requires the manufacturing companies to present a certification consultant with all the details of an application, including the precise layout of the operational environment, the intended human-robot collaborative operation modes (ISO, 2016; Rosenstrauch & Krüger, 2017), the specifications of the end effector and the objects being manipulated, and the application’s source code (Michalos et al., 2015). Given these specifications, the consultant carries out physical measurements (forces, moments, angles of attack, etc.) based on a series of predefined hazard scenarios considered relevant for the respective application. 
If the residual risks (i.e., the risks that cannot be eliminated using technical means) of the application are acceptable with respect to the applicable norms, the consultant grants a safety certificate.

The project that we analyze in this paper sets out to address such issues through “democratization,” operationalized by facilitating the crowdsourcing of cobot software and applications by members of a makerspace in cooperation with an interdisciplinary research team. The empirical data were collected using an ethnographic approach (Amann & Hirschauer, 1997; LeCompte & Schensul, 1999; Van Maanen, 2011) based on participant observation and interviews with makerspace representatives and other members of the project team, consisting of robotics researchers and cobot safety experts, human-robot interaction (HRI) researchers, industrial robotics engineers and trainers, and makerspace employees (trainers and programmers). We observed two project phases, lasting for about two years, by participating in all project meetings as well as by observing project-related activities and conducting interviews in a makerspace and a factory training center. In the first phase of the project, we focused on the interests of the different partners, converging around the shared goal of “democratizing cobot technology in makerspaces.” Then, in the second phase, we observed how the safety issue was being dealt with by the different actors involved in the project and how the makerspace reconfigured its internal organizational structure to fulfill its duties in the project. During this time, the composition of the project team changed several times, especially on the side of the makerspace.

3. Trading Zones, Makerspaces and the Democratization of Technology

The theory behind the analysis in the context of makerspaces is informed by the concept of the trading zone. The use of this concept implies a strong focus on the exchange of knowledge, expertise, and practices between members of different technical cultures. According to Collins et al. (2007), depending on the configuration of power relations between the participants in the trade, there can be different types of trading zones, which can transition from one into another. In the paragraphs below, we will first provide insights from the literature into the maker culture and its relation to the idea of democratizing technology. After this, we connect those insights to the concept of the trading zone.

3.1 Makers and the democratization of technology

The connection between the idea of technology democratization and the maker culture is already well established. Bijker (1996) proposes the term “democratization of technology”, denoting a form of resistance against established institutions and regimes of knowledge production, in which experts play dominant roles in spite of their “interpretive flexibility” (Bijker et al., 1987). In Bijker’s view, participation and pluralism are determining features of democracy, which can also be transferred to technology in terms of development and use. Smith et al. (2020) note that, although the social construction of technology “is nothing new”, the recent discourses proclaiming a so-called “Fourth Industrial Revolution” driven by “cyber-physical production systems” tend to forget about the historical role of the social component in production systems. What Smith et al. (2020) refer to as “post-automation” seems to draw from Bijker’s “democratization of technology”:

“… post-automation is about the subversion of technologies that appear foundational to automation theory, and appropriating them for different social purposes, on less functionalist terms. Post-automation looks to a more open horizon based in democratic and sustainable relations with technology, and that thereby develops socially useful purposes in human-centred not human-excluding ways.” (p. 9)

Tanenbaum et al. (2013) suggest a clear link between the maker community and the democratization of technology, by arguing that “DIY practice is a form of nonviolent resistance: a collection of personal revolts against the hegemonic structures of mass production in the industrialized world” (p. 2609). As Tanenbaum et al. (2013) note, through this form of nonviolent, nonthreatening resistance, the members of the DIY community become themselves “co-designers” and “co-engineers” of technologies normally created by experts in laboratories, scientific institutions, and private organizations by first appropriating them and then contributing to their (further) development in a significant way.

Some authors regard the maker movement as an effect of a so-called “Third Industrial Revolution” (Rifkin, 2011; Troxler, 2013), which is characterized by the shift from a hierarchical towards a so-called lateral distribution of power in the manufacturing domain. This shift is facilitated by state-of-the-art digital communication infrastructures and means of energy production. Fablabs (fabrication laboratories) in which members have access to the newest (often additive or subtractive) manufacturing technologies (e.g., 3D printers, laser cutters, CNC mills, etc.) helped to blur the “labour-capital-divide” and the “white-collar-blue-collar-divide” by facilitating the re-emergence of the “owner-maker” and of the “designer-producer” (Troxler, 2013).

Recent work in peer production studies provides a more nuanced image of makerspaces and their communities. Not only are makerspaces a ‘new old’ kind of institution, having roots in the so-called Technology Networks, which emerged in Britain during the 1980s (Smith, 2014); but instead of embodying the idea of resistance against dominant institutions and regimes of technological innovation, some of them seem to be increasingly defined by ‘institutional encounters’ with organizations and regimes from which they wish to delimit themselves (Braybrooke & Smith, 2018). In the 1980s, technology networks were “community-based workshops [which] shared machine tools, access to technical advice, and prototyping services, and were open for anyone to develop socially useful products” (Smith, 2014, p. 1). Some 20 years later, as Braybrooke & Smith (2018) note,

“[d]epending upon the specific institutional encounter, makerspaces are becoming cradles for entrepreneurship, innovators in education, nodes in open hardware networks, studios for digital artistry, ciphers for social change, prototyping shops for manufacturers, remanufacturing hubs in circular economies, twenty-first century libraries, emblematic anticipations of commons-based, peer-produced post-capitalism, workshops for hacking technology and its politics, laboratories for smart urbanism, galleries for hands-on explorations in material culture, and so on and so on … and not forgetting, of course, spaces for simply having fun.” (p. 10)

Whereas such interpretations provide an interesting look into the role of makerspaces as drivers of innovation, they also bring up the issue of how makerspaces can maintain their autonomy and preserve their role as a more democratic alternative to rule-based, normative institutional models in dealing with traditional organizations (Braybrooke & Smith, 2018). The question is therefore what the term “democratic” actually means in such encounters. The same authors conclude that “[t]he social value in makerspaces lies in their articulation of institutional tensions through practical activity, and in some cases, critical reflexivity” and therefore should not be devalued because they cannot “overturn institutional logics all by themselves” (p. 11).

Other strands of research speak of the diversity of motivations and programs driving the members of the maker community around the world. Lindtner (2015) notes that in China, actors in makerspaces appear to have a political agenda when they engage in doing things in a country-specific way as an ‘antiprogram’ to widespread Western manufacturing technology and culture pervading Chinese factories. By contrast, Davies (2017) argues that in the United States, makers do not seem to have political motives or to compete with traditional manufacturing sites. Instead of subscribing to ideals of democratizing technology and manufacturing techniques, US makers and hackers seem more concerned with being part of a community for purposes of leisure and socialization.

The growing number of encounters between members of traditional organizations, like companies or research institutes, and members of the maker community led Dickel et al. (2014) to regard shared machine shops as ‘real-world laboratories’:

These spaces provide niches for experimental learning that expand the scope of established modes of research and development which are predominantly embedded in professional contexts of industry or science. As a specific property, [shared machine shops] have a capacity for inclusion because they provide infrastructures for novel forms of collaboration as well as self-selected participation of heterogeneous actors (in terms of expertise, disciplines, backgrounds etc.) who can join the related endeavours.

As real-world laboratories, shared machine shops are places where people can join collaborative projects; they “might also be places of serendipity, where experts and professionals meet with hobby enthusiasts and DIY innovators and work together on new, unexpected projects” (Dickel et al., 2014, p. 7). Yet, while terms like “real-world laboratories” shed light on the roles shared machine shops play in society, they do not say much about how and why exactly collaborations between different kinds of (un)certified experts come about, unfold, flourish, or fail. By framing such collaborations using actor-network theory, Wolf and Troxler (2015) investigate how knowledge is co-created and shared in open design communities. Other authors have pointed to the existence of a logic of exploitation (Söderberg & Maxigas, 2014), which, for example, leads private companies to crowdsource software and hardware by drawing on the availability and expertise of so-called ‘independent developers’ (Drewlani & Seibt, 2018). Drewlani and Seibt (2018) note that the independent developer is a co-creation of the interested company and the co-interested developers (in an actor-oriented sense), who are enrolled in company-controlled development activities.

3.2 Makerspace encounters and the trading zone concept

Complementing this body of work, our focus in this paper is on a rather specific kind of encounter: that between robotics and human-machine interaction researchers on one side, and members of the DIY community on the other. While peer production studies have looked at how additive and subtractive manufacturing technologies are being used, little attention has been given to the appropriation of assembly technologies, like industrial robots, which are essential in complex product manufacturing processes. Our perspective also departs from the dichotomous view of makerspaces and other institutions (companies, research institutes, government agencies, etc.) as being entirely distinct entities, which only interface through well-defined protocols of collaboration. Instead, we argue that the makerspace, as a particular socio-technical configuration, facilitates the emergence of trading zones between the members of different types of (research) institutions. Makerspaces, including their members and “member-employees,” thus play a dual role as trading partners and as facilitators of the trade, while having to fulfill the specific interests that accompany the respective roles.

Galison (1997) coined the term trading zone, representing “[a] site—partly symbolic and partly spatial—at which the local coordination between beliefs and action takes place” (p. 784). Such coordination is made possible through interlanguages (trading languages, pidgins, creoles), which facilitate the communication between different epistemic subcultures (e.g., theoretical and experimental physics) sharing a common goal (e.g., to build a radar system). Drawing on Galison’s work, Collins et al. (2007) note that there can be several types of trading zones, which do not necessarily build on interlanguages alone but also on what Collins & Evans (2002) call “interactional expertise”—expertise that, for example, is sufficient to interact with participants and carry out a sociological study; or boundary objects—“objects which are both plastic enough to adapt to local needs and constraints of the several parties employing them, yet robust enough to maintain a common identity across sites” (Star & Griesemer, 1989, p. 393). Schubert and Kolb (2020) provide an example of a trading zone between social scientists and information system designers, while emphasizing the importance of “symmetry” as “a mode of mutual engagement occurring in an interdisciplinary trading zone, where neither discipline is placed at the service of the other, and nor do disciplinary boundaries dissolve” (p. 1). The trading zone concept has also been applied to exchanges between non-scientific communities (Balducci & Mäntysalo eds., 2013; Gorman ed., 2010). In these examples, the different groups involved in the trade seem to have sufficient epistemic, political or other kinds of authority to act as approximately equal partners in the trade. The balance of power relations between trading partners determines whether a trading zone tends to be collaborative, coercive, or subversive (Galison, 2010; Collins et al., 2007).

Collins et al. (2007) define trading zones “as locations in which communities with a deep problem of communication manage to communicate” (p. 658), while stressing that “if there is no problem of communication there is simply ‘trade’, not a ‘trading zone’” (p. 658). One type of trading zone identified by Collins et al. (2007), which does not rely on interlanguages, is the so-called “boundary object trading zones, which are mediated by material culture largely in the absence of linguistic interchange” (p. 660). Drawing on Collins et al. (2007), we would argue that in the studied project, the cobot performs like a multi-dimensional boundary object, both materially and conceptually. This performance stimulates and justifies exchanges between researchers and members of the makerspace community (as two different “user-developer” groups) and between different kinds of institutions. As Galison (2010) notes, one way to determine whether a particular sociotechnical configuration may be conceived of as a trading zone is to look at what is being traded, by whom, and how power is distributed among the partners of the trade. To provide situated answers to these questions, we will turn to our account of the empirical material, in which the situated interactions between the different actors involved in the project facilitated the emergence of a trading zone through the shared goal of democratizing cobot technology.

4. Trading robotics knowledge and practices

Drawing on the theoretical framework above, in the following we investigate the makerspace using the lens of the trading zone on three different observations. First, we analyze in general how collaborations between researchers and members of the DIY community are facilitated in makerspaces. After that, we look at a specific deliberation on cobot safety between the members of the project team, whereby we discuss how this notion is being construed and negotiated by the different individual and institutional actors involved in the studied project. Finally, we look at the way in which knowledge about cobot safety and applications is produced in a context previously unforeseen by the creators of the technology.

4.1 Actors’ Hopes and Expectations for the Project

During the project’s preparatory phase, the representatives of the different institutions involved in the project (a robotics research institute, a human-machine interaction group from a university, a factory training center, and a makerspace) emphasized the need for democratizing cobot technology. For the HRI researchers, this implied that the cobot could be made more accessible to a wider range of potential users. These researchers believed that the technical and economic potential of cobots was curtailed by the strict safety norms and standards governing the industrial uses of the technology. In addition to the safety issue, some HRI researchers considered that strictly controlled factory and laboratory environments impose limits on the creativity of application developers. The researchers from the robotics institute, on the other hand, were interested in meeting the “maker scene” to gain access to a pool of potential contributors to open-source robotic software. In this sense, these researchers expressed their hopes concerning the organization of “hackathons” and other development competitions. The roboticists were also interested in exploring new ways of ensuring human-cobot interaction safety, which would allow more flexibility and creativity than current industrial safety norms. The representatives of the makerspace, being the providers of such a maker scene, stressed that “this kind of project” was exactly what they were looking for in order to develop their expertise in the domain of robotics. Besides providing its paying members with access to a wide range of industrial tools and machines, the makerspace is part of a holding company, which turned a former factory into a business hub, hosting co-working spaces, company offices, and the respective makerspace. With more than 500 members, many of whom are artists, students, and diverse professionals, one of the makerspace’s roles in this configuration is to draw talent and expertise into its ecosystem.
Finally, the representatives of the factory training center expressed their wish for the project to loosen cobot safety certification requirements and reduce costs. Also, they stressed the industry’s need to increase the pool of skilled cobot programmers and operators. In this sense, the makerspace could serve as an additional robotics training site located in a city. The training center organizes a high number of courses with a diverse pool of participants, ranging from teenagers to unemployed persons seeking to engage in reskilling activities.

The way the different partners engaged with the topic of democratization of technology is best understood in practical terms. In this project, “democratization” functioned as a buzzword, much like “ethical robots”, “responsible innovation”, and “human-centred technology”, and its role was never very clear. First and foremost, the notion did not prompt many explicit discussions of what it means to democratize cobot technology. Nevertheless, even a buzzword that is deployed in a rather superficial manner can have an effect: it can help to establish the trading zone that allows the different stakeholders of the project to have encounters in a much more profound manner (Bensaude Vincent, 2014; Ionescu, 2013). The implicit ideas on the concept of democratization invited and facilitated the encounters between the different actors in the project. Within the context of those encounters, the different actors in the project were able to jointly transform their knowledge and practices towards enabling a different mode of conducting human-robot interaction research in an unconventional cobot usage context. Furthermore, this newly established infrastructure of inquiry also provided room for qualitative methods, such as participant observation, which contrasts with the measurement-based assessment methods used in industrial safety certification practices.

4.2 Negotiating Safety at the Boundary

For the HRI researchers on the project team, the implicit idea behind democratization was to design the architecture of the cobot system for the makerspace. This included defining the system’s safety features. The goal was for makerspace members to interact with the cobot safely and directly without application-specific safety certifications or supervision by trainers. With these goals in mind, the project pushed to find a suitable technical solution that would work both for the factory’s training center and the makerspace. Several face-to-face and online meetings were organized. In the following, we discuss some of the recurring themes observed during these meetings. The reporting technique used is that of short critical event vignettes based on field notes, which “depict scenes that were turning points in the researcher’s understanding or that changed the direction of events in the field site” (LeCompte and Schensul, 1999, p. 273).

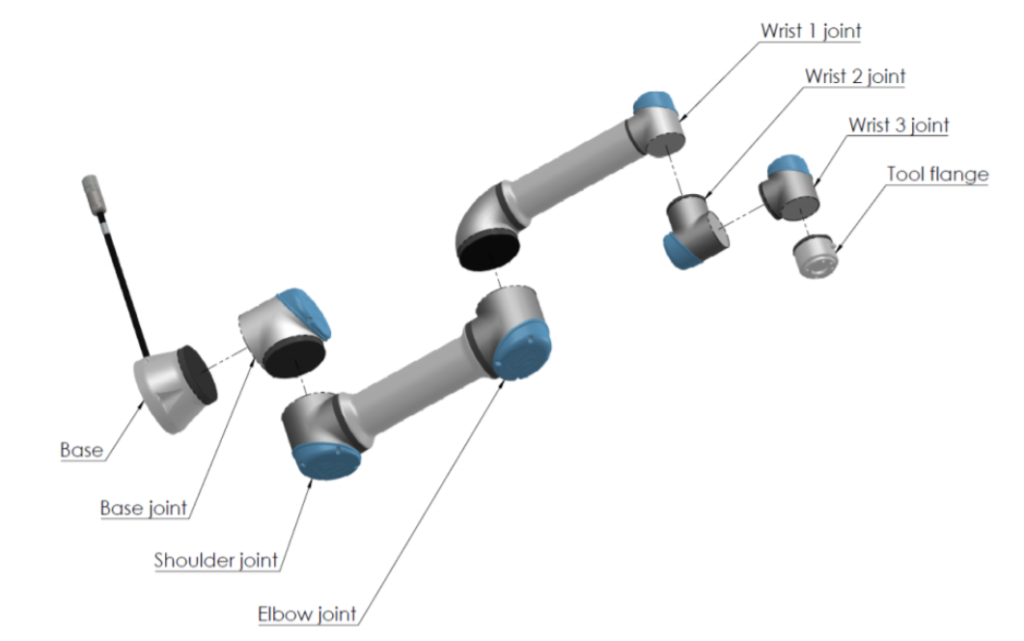

Figure 1: The axes (or joints) of a typical 6-degree of freedom robot.

Vignette 1 – Trading flexibility for safety. In one pivotal meeting, the participants discussed the problems faced by the factory when certifying new cobot applications. Certifications consist of scenario-based risk and hazard analyses conducted by external consultants. The meeting’s focus was on the safety certification of cobot training as an application. As one trainer explained, a surprising result of the certification process was that the range of the end effector of the 6-axis robotic arm used was significantly limited in the horizontal plane in order to minimize the risk of head injuries:

The robot arm is not allowed to reach higher than a few centimeters above the table. [She indicates 10-15 cm with her hand.] This was not thoroughly thought through by the consulting company. One teaches a move at 140 mm and does not see anything.

To enforce this constraint, the consulting company limited the range of the robot’s “elbow,” i.e., the third axis from the base (see Figure 1). This solution puzzled the safety experts from the robotics research institute, who did not understand the purpose of this measure, since “the third axis could be anywhere” and might therefore still pose safety risks. Moreover, as one trainer pointed out, in productive applications, the third axis limitation did not exist because it would make the robot practically useless. As a result, whereas trainees would learn how to use the robot in a reduced functional mode, on the shop floor they would be exposed to another, less restrictive one. The trainers considered this discrepancy unacceptable.

This vignette suggests that cobot safety is contingent on the context of use, which entails different trade-offs between safety and flexibility. In the context-dependent application of the norms by certification consultants, common-sense considerations seem to carry less weight than measurements and hazard scenarios, something that the trainers considered problematic. “Safety” thus seems to be flexibly interpretable by consultants and factory representatives. This insight is important because ensuring safety was one of the main goals of the project proposal. If the very notion of safety was flexibly interpretable, then safety as a goal could not easily be achieved. Through these kinds of encounters, it became clear how different interpretations of the cobot as well as its potential use were contingent on how safety was going to be negotiated between participants throughout the project.

Vignette 2 – Deferring to training and experience. In a follow-up meeting, the researchers from the robotics institute laid out their plan for a so-called “cobot safety concept” for makerspaces and training centers. In these settings, additional cobot safety features could be incorporated or detached depending on the experience level of the respective user. There would be two working modes: a safe one and a less restrictive, creative one, the latter of which could be used during training sessions. As one safety expert argued, deferring safety to training and experience was motivated by the principle that, “whenever one works with a hazardous technology, one necessarily needs training.” This philosophy was in line with that of the makerspace representative participating in the meeting, who proposed that the safety concept should be accompanied by a “training concept” with several attainable levels:

When a person reaches a certain level, they get an OK from the experts. We need to think about a good training concept. […] After completing all training modules—we’ve done everything we can! Now you have to take care yourself.

As the discussion continued, a more nuanced image of the safety and training concepts emerged. The training modules and levels would be tied to the safety features of the robot. After receiving basic safety training, makerspace members would be allowed to use the cobot in a power- and force-limited mode. An additional training module would qualify users to teach the robot and to have it pick and place 3D printed parts, which do not have sharp edges. A further module would grant the privilege of working with the robot at higher speeds and forces. On another note, one of the robotics experts explained that safety certifications distinguish between qualification and competence. To attest competence, the number of usage hours, the number of completed projects or simulations, and other kinds of practical experience with cobots may be taken into consideration. “Whether or not these experiences are successful is not important,” the expert concluded.
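The coupling between training modules and robot privileges described above can be sketched in a few lines of Python. This is only an illustration and not the project's actual safety or training concept: the module and permission names are hypothetical labels for the three steps mentioned in the meeting, and the rule that modules must be completed in order is an assumption read into the words “additional” and “further.”

```python
# Hypothetical module names, one per step mentioned in the discussion.
BASIC_SAFETY = "basic_safety"
TEACH_AND_PICK = "teach_and_pick_place"
HIGHER_SPEED_FORCE = "higher_speed_and_force"

# Assumption: modules build on each other in this order.
MODULE_ORDER = [BASIC_SAFETY, TEACH_AND_PICK, HIGHER_SPEED_FORCE]

# Hypothetical permission labels granted by each module.
PERMISSIONS = {
    BASIC_SAFETY: {"use_power_and_force_limited_mode"},
    TEACH_AND_PICK: {"teach_robot", "pick_and_place_3d_printed_parts"},
    HIGHER_SPEED_FORCE: {"work_at_higher_speeds_and_forces"},
}


def permissions_for(completed_modules):
    """Return the privileges a member holds, given the modules completed.

    Privileges accumulate level by level; a module only counts if all
    earlier modules have been completed as well.
    """
    granted = set()
    for module in MODULE_ORDER:
        if module not in completed_modules:
            break  # a missing prerequisite blocks all later levels
        granted |= PERMISSIONS[module]
    return granted


if __name__ == "__main__":
    print(sorted(permissions_for({BASIC_SAFETY})))
    print(sorted(permissions_for({BASIC_SAFETY, TEACH_AND_PICK})))
```

In such a scheme, the robot's working mode follows from the member's training record rather than from a case-by-case certification of each application.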

This vignette shows how the notion of safety was developed through a new safety concept. It suggests that, in contexts where application-based certifications are not feasible, experts relate their perception of safety to user experience. Most project team members considered the training and experience of users mandatory, even in makerspaces, since, in the case of an accident, authorities would scrutinize how the training sessions had been organized and how the different levels of safety had been ensured in relation to the experience of users. As a result, the safest strategy for implementing cobot safety in the makerspace turned out to be similar to the one implemented by the factory’s training center. During these meetings, the safety and training concepts appeared to take shape iteratively and collaboratively in a path-dependent way. The role of “the experts” in defining the safety features of the cobots and the different levels and permissions of the training programs was uncontested, which shaped the way in which encounters took place at that stage of the project.

Vignette 3 – The responsible user. While the project team worked on defining a new safety and training concept, one member of the team found out that another makerspace had already permanently installed a cobot, which could be used by members at their own will. The safety concept used in that makerspace was based on common sense; or on “a sane human mind,” as one of its representatives explained. Members were only offered a basic safety training, which included indications about which objects the robot was not allowed to manipulate (e.g., knives and other sharp tools). The users were then considered responsible for their own safety as per the general terms of agreement stipulated in their membership contracts. The peer pressure generated by this unexpected turn of events produced a certain shift in power relations between the robotics safety experts and the HRI researchers on the team, the latter of whom were less concerned with the safety issue and more eager to install the cobot in the makerspace as soon as possible. The competing makerspace had brought about—although indirectly—a new image of cobot users as responsible and accountable individuals, capable of taking care of their own safety. Accountability was ensured by having all members sign a liability waiver—a common practice among makerspaces.

This episode illustrates how the responsible user model as an alternative concept challenged the need for an elaborate safety and training concept in the project. In response, the safety experts on the team provided a new set of options concerning the system architecture, which now included a “gradual” safety model. Also motivated by budgetary concerns, one variant of this model foresaw the acquisition of a basic cobot without any additional safety features, provided that it was operated very slowly, with hardcoded speed limits ensuring safety. As more experience would be gained by observing how members interacted with the cobot, the safety experts hoped that a better-informed decision could be made concerning the additional safety systems required. However, the makerspace was to “seriously consider how much residual risk they were willing to accept,” the roboticists insisted.

Taken together, the three vignettes illustrate how the members of different technical cultures jointly sketched out a new cobot safety concept for makerspaces, a new context of use for this technology. In this process, the roboticists and industrial engineers set out from a common understanding based on the relevant industrial safety norms, with which they were familiar. Yet, as the discussion progressed, it became clear that the application of these norms in non-productive settings, such as the training center, led to impractical solutions, with which all actors were dissatisfied. Simultaneously, the image of the projected user (Akrich, 1992) started to shift across the three vignettes in fundamental ways.[1] While in factories, safety norms configure human-robot interactions, some makerspaces trust their members to be responsible and accountable individuals, who can work with “hazardous technologies” without extensive training. By configuring the responsibility of the projected user on a spectrum from total dependency on norms (i.e., factory workers for whose safety others are responsible) to autonomy (i.e., makers and hackers responsible for their own safety and accountable for any consequences), the actors seemed to have found a fruitful ground for negotiation, which allowed them to further transform their knowledge. The trainable, configurable, and responsible user played an essential role in articulating the link between the safety concept and the training concept. The flexible, module-based training concept proposed by the roboticists helped to relate the training methods and practices used in the factory’s training center to those used in the makerspace.

The empirical material also suggests that negotiating safety at the boundary between different institutions and technical cultures represents one of the practical means of democratic deliberation that were used during the project. Facilitated by the cobot as an articulated boundary object (comprising a safety concept, a training concept, and the flexible interpretation of safety), the members of the project team managed to transform their knowledge in ways that would not have been possible had they stayed within the frame of mind of their own institutions and technical cultures. This transition became obvious when the responsible user model was blended with the safety measure of simply slowing down the robot to produce a viable preliminary cobot system that was “safe enough” for work in the project and the makerspace to continue.

4.3 Let’s Agree to Disagree

In addition to participant observation, we conducted interviews with the trainers from the factory training center and those from the makerspace. Concerning the safety issue, the questions clustered around the topics of how training courses are organized, how safety is being addressed in the training sessions, and what potential hazards the trainers see in the trainees’ interactions with cobots. The following interview extracts illustrate several of the differences in the language and practices used by researchers and industrial engineers on the one side, and cobot trainers from the makerspace on the other. For example, to the question of what could potentially go wrong during training, one trainer from the factory training center responded:

So, there can be unforeseen movements; therefore, each step [of the robot] towards the next waypoint is being tested before the entire program runs because there are some [robot] motions, where—and this happened a few times during workshops—the robot chooses a completely different way as one would expect.

The trainer points to unforeseen motion paths as one potential safety issue occurring during workshops. Unforeseen pathways between predefined waypoints occur because the so-called inverse kinematics algorithms compute the six robot joint angles for a given target pose, and the elements of the robot arm are then rotated until that target pose is reached, without the path of the end effector in between being specified by the user. This issue is treated differently by the makerspace trainers. The following discussion illustrates this contrast:

Interviewer: So with MoveJ [joint based movement, as explained before] or which kind of movement?

Trainer: Definitely not linear. I think it must have been MoveJ. For sure.

Interviewer: And this happens also when you are running the simulation on the teach pendant first, or…

Trainer: This, funnily, we don’t do.

Interviewer: Ok.

Trainer: We do not watch the simulation [of the movement].

Interviewer: … pause … Me neither!

Trainer: Ok. [Laughing together]

One way to avoid unexpected robot pathways is to first simulate them using the robot’s software. Yet, in the makerspace, using the simulation to preview the robot’s movements during training sessions does not appear to be common practice. Although intrigued by this answer, we tried to avoid being normative by admitting that simulation is not always necessary. The next excerpt illustrates how unforeseen movements are perceived by trainer and trainees and how the trainer goes about explaining what happens behind the scenes:

Interviewer: Is this [the unexpected movement] something that disturbs the workshops or is it funny when it happens? What is the effect?

Trainer: The effect is mostly “oh, I did not reckon with that.” [The trainees] are surprised but they are not scared. […]

Interviewer: And if they ask “what just happened?”, how do you explain that?

Trainer: We try to find out together, to remove [the problem]. I think I never spoke in a workshop about inverse kinematics. I try to avoid that because, to be honest, I am not knowledgeable enough myself to properly explain that. What I do explain is that, during the different movements (that is, MoveJ), the robot uses the axes in such a way that it is most effective for itself. And in the case of a linear movement, it goes from point to point in a line, which is not the case with MoveJ. So this is my explanation for the two movement types.

In this excerpt, the makerspace trainer seems to argue in favor of providing non-expert explanations for unexpected robot movements, while invoking her lack of knowledge concerning inverse kinematics. In these situations, the trainer seeks ways to legitimize simplified explanations over robotics terminology in an attempt to distill the necessary practical knowledge from a theory that is inaccessible to non-experts. Nevertheless, the trainer adopts the technical term ‘MoveJ’, which we had dropped earlier in the discussion, thus showing how the language exchange takes place. To the question of whether one would gain something by talking about inverse kinematics during the workshops, the makerspace trainer responded:

I don’t think so. I think, to be honest, that inverse kinematics is only relevant when one is really interested in robotics, that is, when one wants to go deeper. But for programming, if one is taught how—and this pertains to intuition—to learn something not through reading but through “doing” and to understand and for that there are possibilities in the makerspace; and everyone who works with [the robot] knows it that every step that I program must be tried out and not with 100% speed. And I think that this way, one gets a tremendous feeling about what is possible and what is not.

The trainer stresses that speaking about this problem using robotics terminology is neither necessary nor desirable. She then goes on to sketch the profile of a projected cobot user who is likely to be encountered in the makerspace. The responsible cobot user is thus defined as a pragmatic individual, who is well aware of the safety hazards entailed by working with the cobot while nonetheless being more interested in programming the cobot than in learning about its internals. This type of user is expected to benefit from the resources of the makerspace (interested peers, more experienced trainers, other workshops, etc.) to learn about safety and other issues. The makerspace is thereby posited as a source of practical knowledge and possibilities: one only needs to ask and come up with ideas, whereby the role of reading is superseded by that of “doing.” Together with the image of the pragmatic, responsible cobot user, downplaying the importance of robotics theory and terminology may be regarded as an act of resistance to well-established forms of knowledge, learning, and acting that are characteristic of traditional institutions; a resistance articulated around knowledge gained through and (re-)invested in practice rather than theory; and a form of resistance through doing that keeps the power relations between makers and researchers balanced and the principle of symmetry intact. In the next section, however, we show that the makerspace in question was itself reconfigured over the course of the project.
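The difference between the two movement types discussed in the interviews can be made concrete with a small numerical sketch. It is a deliberate simplification and not the cobot’s actual controller: instead of a 6-axis arm it uses a hypothetical planar arm with two joints and unit-length links, and the function names are illustrative. A joint-based move (in the spirit of MoveJ) interpolates the joint angles, whereas a linear move interpolates the position of the end effector; a real controller would additionally solve the inverse kinematics at every point of the linear path.

```python
import math

# Assumed link lengths of a simplified two-joint planar arm.
LINK_1 = 1.0
LINK_2 = 1.0


def forward(q1, q2):
    """Forward kinematics: joint angles (radians) -> end-effector (x, y)."""
    x = LINK_1 * math.cos(q1) + LINK_2 * math.cos(q1 + q2)
    y = LINK_1 * math.sin(q1) + LINK_2 * math.sin(q1 + q2)
    return x, y


def joint_move(q_start, q_goal, steps=50):
    """Joint-based move: interpolate the joint angles, record the tool path."""
    path = []
    for i in range(steps + 1):
        t = i / steps
        q1 = q_start[0] + t * (q_goal[0] - q_start[0])
        q2 = q_start[1] + t * (q_goal[1] - q_start[1])
        path.append(forward(q1, q2))
    return path


def linear_move(p_start, p_goal, steps=50):
    """Linear move: interpolate the end-effector position directly."""
    path = []
    for i in range(steps + 1):
        t = i / steps
        path.append((p_start[0] + t * (p_goal[0] - p_start[0]),
                     p_start[1] + t * (p_goal[1] - p_start[1])))
    return path


def max_deviation(path):
    """Largest distance of any path point from the straight start-goal line."""
    (x0, y0), (x1, y1) = path[0], path[-1]
    length = math.hypot(x1 - x0, y1 - y0)
    return max(abs((x1 - x0) * (y0 - y) - (x0 - x) * (y1 - y0)) / length
               for x, y in path)


if __name__ == "__main__":
    joint_path = joint_move((0.0, math.pi / 2), (math.pi / 2, math.pi / 2))
    line_path = linear_move(joint_path[0], joint_path[-1])
    print("joint-based move, deviation from straight line:", max_deviation(joint_path))
    print("linear move, deviation from straight line:", max_deviation(line_path))
```

Both moves start and end at the same two points, but in the joint-based move the end effector swings along an arc between them. This is the kind of path that can surprise trainees who expect the robot to take the direct route, and it is why trainers test each step at reduced speed.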

5. Reconfiguration

In the second part of our analysis we trace how the studied makerspace was reconfigured under the influence of other institutional models. As the makerspace facilitates a trading zone in which it becomes accessible to research institutes and companies, its activities are increasingly projectified and a process of professionalization is pursued. We analyze this by going through three different roles of the makerspace: the makerspace as the provider of the infrastructures behind this trade; the makerspace as a party with specific interests in the project, leading to particular expectations concerning the project’s outcome; and finally the makerspace as a trading partner in exchanges that are supposed to help in the co-construction of cobot technology.

5.1 Infrastructures and the trading of epistemic goods

Within the sociotechnical configuration of the studied project, the notion of democratization of technology allowed for pivotal agreements on an institutional level, between the different types of organizations involved in the project. By offering the infrastructure and a pool of participants, the makerspace received expertise in the domain of robotics and HRI from two research institutes and an industrial training center. The training center exchanged their safety requirements and expertise gained through expensive certification processes for a new safety concept and the promise of access to a new training infrastructure. The makerspace thus positioned itself as a facilitator of the trade between different kinds of institutions, while taking part in the trade itself as well. Within this context of facilitation, the main form of exchange occurred at the conceptual level between the researchers and engineers involved in the project. United by the common goal of democratizing cobot technology for different purposes, they transformed their knowledge about this kind of industrial robot. When used in an industrial context, the cobot seemed to convey the image of a pre-programmed machine, which configures worker routines. By contrast, in the makerspace it was perceived more like a computer that moves, which allows its users to explore its capabilities in a playful manner. The news about another makerspace having already permanently installed a cobot affected the project team’s preliminary safety concept, which was built upon an elaborate training program combined with very specific active safety systems. These “epistemic moments” prompted an inversion in the perception of the user-cobot relation, with users becoming interacting subjects rather than objects of inquiry for researchers and engineers.

It is through these moments that a form of epistemic trade becomes most evident. The goods of this trade are epistemic in nature because they challenge the entrenched beliefs, practices, and knowledge of the participants in unexpected ways. The “epistemic goods” being traded between the members of different technical cultures seem to be of little value within one’s own culture, perhaps because they are considered less important than other objects and thus play secondary roles in knowledge production processes. In this regard, the present case study suggests that, to foster sustainable exchanges between researchers and makerspace members as well as between members possessing different kinds and levels of expertise, trade must be fair in the sense that the contributions of all the parties involved should be balanced and equitable. Mutual respect for each other’s expertise is required of both researchers and participants. Through mutual respect, hierarchical boundaries induced by the members’ diverse educational backgrounds can be blurred. Finally, the benefits of the trade must be shared one way or another with the members of the other culture, to whom the receiving parties remain indebted.

5.2 Interests and the alignment of expectations

Whereas the makerspace can be seen as an important facilitator of the trading zone, as argued above, balancing power and negotiating different interests among the actors involved in the trade was a fragile endeavor. Furthermore, a difficult financial situation urged the makerspace to seek more aggressive ways to capitalize on the outcomes of the project. Crucial here is the fact that the makerspace in question is also an institution which, shortly after its inauguration, provided creative refuge primarily to the members of the local community of artists and to members of the public. Roughly three years later, however, it is seeking to professionalize its staff and organizational culture. This is perhaps also an effect of the Covid crisis, which made high-tech makerspaces even more dependent on public funding than before. At the same time, some of the early members of the makerspace saw an opportunity to capitalize on their own creativity. Especially when public funding is used, there is hope for another mode of working, in which people get paid for their creative work without having to protect and commercialize it using instruments like patents and startups. This reconfiguration led to misalignments of expectations, thereby delegitimizing gig-based trade between the makerspace and its members. The following episode provides an example of misalignment, reflecting the makerspace’s changed attitude towards its member-employees.

The makerspace contracted one of its members to find novel potential collaborative robot applications. The member’s freedom was thus constrained to some extent by the requirement to produce a result in line with the goals of the project. At the same time, there were no restrictions as to how, when, and where such explorations should happen. After some time, the assessment of this “uncertified expert” was that, with the exception of a stop-motion application (i.e., using the robot to film or photograph plants and other (living) things over long periods), cobots could only do what other specialized machines, like 3D printers or circuit board assembly machines, already did better. Instead of a collection of applications, the programmer presented a new piece of robot software, which he had created outside the allotted contingent of hours for which he was being paid. The idea, so he told us, came during a long train ride. He integrated an inverse kinematics library for the UR5 cobot into an existing 3D simulation and programming environment, in which different kinds of curves could be drawn by a user and followed by the robot, both in the simulation and in reality. Whereas the idea was not entirely new, the way in which it was implemented was highly interesting and innovative. Some of the researchers involved in the project regarded this outcome as a fulfillment of the hopes and expectations with respect to open innovation in makerspaces. The programmer, one of the makerspace’s “hackers,” proved that, within a few weeks, a single creative and skilled individual can achieve results that other institutions would pursue using much greater investments and bureaucratic overhead. Yet, to the disenchantment of the entire project team, the hacker was not willing to publish the source code without what he considered a fair remuneration for his efforts. This was frustrating for some members of the project team, since the exchange of ideas that preceded the development of this software also contributed to its design.

5.3 Exchanges and the co-construction of technology

The abovementioned reconfiguration of the makerspace also signals another shift: the makerspace appears to have transitioned from one trading mode to another. In that regard, the trading zone model complements that of makerspaces as “real-life laboratories” (Dickel et al., 2014) by suggesting that the collaboration between techno-scientists and lay or “uncertified experts” (Collins & Evans, 2002), and between research institutes and makerspaces, is inherent to the co-construction of technologies and their users (Oudshoorn & Pinch, 2003) as a process of transition towards a potentially sustainable mode of knowledge production rather than a controlled experiment. The “problem of communication,” which Collins et al. (2007) require to justify the use of the trading zone concept, lies in the distinct languages used by the researchers and the makerspace members. This problem was overcome when a trading language emerged, which facilitated the communication between makerspace members and researchers, while allowing the members of each culture to pursue their own interests. The resulting sociotechnical configuration of the project resembled a collaborative, heterogeneous trading zone, which produced a new image of the responsible cobot user as well as a novel robotics software environment that fit the spirit and the various interests of makerspace members. As discussed in the previous section, the makerspace first contracted an existing member, who was skilled in programming, to experiment with the cobot and thus find novel potential collaborative robot applications. However, after a period of evaluation and negotiation, the makerspace management decided not to acquire the rights to the newly developed tool, to terminate the contract with this programmer, and to hire a trained roboticist to work on the project.

When the makerspace hired a professional robotics engineer to conduct training sessions and foster the creation of new cobot applications by regular members, the trading language also disappeared, as the researchers suddenly found themselves on the same page with the makerspace representatives. That is to say, the makerspace seized the opportunity to absorb some of the expertise of the researchers from the robotics institute by creating the premises for transitioning towards a homogeneous trading zone. In exchange, the robotics institute gained access to a new sociotechnical infrastructure, with the help of which entrenched industrial safety norms and standards could eventually be rendered more permissive or fulfilled in other ways. This suggests that introducing a new technology in makerspaces may cause some degree of institutional isomorphism (DiMaggio & Powell, 1983) through professionalization and normativity. Whereas in the beginning, the project attracted the members of very different technical cultures, thus facilitating the emergence of a heterogeneous, fractionated trading zone around the cobot as a boundary object, almost two years later, a shift toward what Collins et al. (2007) call a “homogeneous” trading zone could be observed, in which the trading partners shared a robotics interlanguage that included notions specific to the use of robots in makerspaces (such as ‘member applications’ in addition to productive applications, ‘responsible users’ and ‘safety concept’ in addition to certified human-robot collaboration/coexistence/interaction, and ‘flexibilities’ instead of restrictions). With professionalization and the introduction of new hierarchical levels and norms of conduct, the makerspace is therefore starting to position itself in Austria as a professional institution, which can quickly adapt to new research topics and forms.

6. Conclusion

Through our analysis of a project focused on the democratization of an industrial technology, we have traced how collaborative robots have crossed the boundary of the factory into the open world. Although anticipated in European policy-making, such crossings play out in unexpected ways. One of the observable effects of the installation of cobots in the studied makerspace was that these robots accelerated the transformation of the makerspace into a technological platform for companies. This reconfiguration was rendered possible through professionalization and business orientation. This underlines the speculative dimension of robotics and automation technologies, whose future holds many unknowns. Moreover and unsurprisingly, there are also many fears and expectations around the future of automation. In this context it is crucial that notions such as post-automation, which stress the need for and chart the potential of more democratic futures around automation, are taken seriously. It is equally important for the promoters of democratic alternatives to the Industry 4.0 vision to gain access to infrastructures that make it possible for them to engage with novel technologies and the sociotechnical futures associated with them. The peer production community in general, and the makerspace model in particular, bear great potential when it comes to the (further) development of such infrastructures. On the other hand, as the paper has argued, such infrastructures reconfigure in unexpected ways. Whether such reconfigurations have indeed led to some form of democratization remains an open question. What has already been achieved, however, is the very use of and engagement with such concepts, albeit mostly implicitly.

When it comes to the analysis of the encounters that are facilitated in the makerspace, our observations suggest that the exchanges and interactions between the members of different technical cultures produced new insights in terms of cobot safety and human-robot interactions. Makerspaces can play multiple institutional roles at once, for example, by being open to the wider public while hosting technologies and members with a level of expertise comparable to that of ‘certified’ institutions and experts. Such relationships between makerspaces and other institutions thus appear to be interwoven to such an extent that a clear distinction between them is no longer possible beyond the observation that makerspaces are, in principle, open to interested members of the public, while other institutions generally are not. When crossing the boundary between industrial research settings and makerspaces, relatively stable models are transformed more profoundly than through exchanges between researchers and engineers alone. In this context, the trading zone model provided an analytical tool for tracing encounters, exchanges, and transformations of and between the members of different technical cultures. This model complements that of real-world laboratories by emphasizing interaction modes and processes rather than the actors’ intentions.

Acknowledgements

We would like to thank the two anonymous reviewers for their insightful comments and feedback. A special thanks goes to the editors of this special issue, Mathieu O’Neil and Panayotis Antoniadis, for their prodigious editorial efforts and support. This research has received funding from the Austrian Research Promotion Agency through the “Cobot Meets Makerspace” (CoMeMak) project number 871459.

End notes

[1] Akrich coined the term “projected users” (as opposed to the actual users of a technology) referring to those user images (or profiles) for which inventors and designers conceive technologies.

References

Akrich, M., 1992. The de-scription of technical objects. In Wiebe E. Bijker and John Law (eds.). Shaping technology/building society. MIT Press, Cambridge, MA, 205-240.

Amann, K. and Hirschauer, S., 1997. Die Befremdung der eigenen Kultur. Ein Programm. Die Befremdung der eigenen Kultur. Zur ethnographischen Herausforderung soziologischer Empirie. Suhrkamp, Frankfurt am Main.

Balducci, A. and Mäntysalo, R. (eds.), 2013. Urban planning as a trading zone. Springer, Dordrecht, NL.

Bensaude Vincent, B., 2014. ‘The Politics of Buzzwords at the Interface of Technoscience, Market and Society: The Case of ‘Public Engagement in Science’’, Public Understanding of Science, 23(3), pp.238–53.

Bijker, W.E., 1996. Democratization of technology, who are the experts. Retrieved November 3, 2009. Available at: http://www.angelfire.com/la/esst/bijker.html

Bijker, W.E., Hughes, T.P. and Pinch, T.J. (eds.), 1987. The social construction of technological systems: New directions in the sociology and history of technology. MIT Press, Cambridge, MA.

Braybrooke, K. and Smith, A., 2018. ‘Liberatory Technologies for Whom? Exploring a New Generation of Makerspaces Defined by Institutional Encounters’. Journal of Peer Production 12, 3 (2018).

Colgate, J.E., Peshkin, M.A. and Wannasuphoprasit, W., 1996. Cobots: Robots for collaboration with human operators. Proceedings of the ASME Dynamic Systems and Control Division, DSC-Vol. 58 (1996), 433–440.

Collins, H., Evans, R. and Gorman, M., 2007. Trading zones and interactional expertise. Studies in History and Philosophy of Science Part A, 38(4), pp.657-666.

Collins, H.M. and Evans, R., 2002. The third wave of science studies: Studies of expertise and experience. Social Studies of Science, 32(2), pp.235-296.

Corbin, J. and Strauss, A., 2014. Basics of qualitative research: Techniques and procedures for developing grounded theory (3rd ed). SAGE Publications, Los Angeles.

Davies, S., 2017. ‘Characterizing Hacking: Mundane Engagement in US Hacker and Makerspaces’. Science, Technology, & Human Values, 43(2), 171-197.

Dickel, S., Ferdinand, J.P. and Petschow, U., 2014. Shared machine shops as real-life laboratories. Journal of Peer Production 5, (2014), 1-9.

Dickel, S., Schneider, C., Thiem, C., and Wenten, K. A. 2019. ‘Engineering Publics: The Different Modes of Civic Technoscience.’ Science & Technology Studies 32, 4, 8-23.

DiMaggio, P.J. and Powell, W.W., 1983. The iron cage revisited: Institutional isomorphism and collective rationality in organizational fields. American Sociological Review, 48(2), pp.147-160.

Drewlani, T. and Seibt, D., 2018. Configuring the Independent Developer. Journal of Peer Production 12, (2018), 96-114.

Galison, P., 1997. Image and logic: A material culture of microphysics. University of Chicago Press, Chicago.

Galison, P., 2010. Trading with the enemy. In Gorman, M.E. (ed.), Trading zones and interactional expertise: Creating new kinds of collaboration. MIT Press, Cambridge, MA.

Gorman, M.E. (ed.), 2010. Trading zones and interactional expertise: Creating new kinds of collaboration. MIT Press, Cambridge, MA.

Kagermann, H., Helbig, J., Hellinger, A. and Wahlster, W. (eds.), 2013. Umsetzungsempfehlungen für das Zukunftsprojekt Industrie 4.0: Deutschlands Zukunft als Produktionsstandort sichern; Abschlussbericht des Arbeitskreises Industrie 4.0, Forschungsunion, Frankfurt a. M.

ISO/TS 15066:2016 – Robots and robotic devices – Collaborative robots. ISO International Organization for Standardization. P.O. Box 56 – CH-1211 Geneva 20 – Switzerland.

Lindtner, S., 2015. ‘Hacking with Chinese Characteristics: The Promises of the Maker Movement against China’s Manufacturing Culture’. Science, Technology, & Human Values, 40(5), 854-879.

LeCompte, M.D. and Schensul, J.J., 1999. Analyzing & interpreting ethnographic data. AltaMira Press, Lanham, MD.

Michalos, G., Makris, S., Tsarouchi, P., Guasch, T., Kontovrakis, D. and Chryssolouris, G., 2015. Design considerations for safe human-robot collaborative workplaces. Procedia CIRP, 37, pp. 248-253.

Oudshoorn, N. and Pinch, T. (eds.), 2003. How users matter: The co-construction of users and technologies. MIT Press, Cambridge, MA.

Rifkin, J., 2011. The Third Industrial Revolution. How Lateral Power is Transforming Energy, the Economy, and the World. New York, Palgrave Macmillan.

Rosenstrauch, M.J. and Krüger, J., 2017. Safe human-robot-collaboration-introduction and experiment using ISO/TS 15066. In 3rd International Conference on Control, Automation and Robotics (ICCAR). IEEE Press, Piscataway, NJ, USA, 740-744.

Schubert, C. and Kolb, A., 2020. Designing Technology, Developing Theory: Toward a Symmetrical Approach. Science, Technology, & Human Values. https://doi.org/10.1177/0162243920941581

Seravalli, A., 2012. Infrastructuring for opening production, from participatory design to participatory making? In Proceedings of the 12th Participatory Design Conference: Exploratory Papers, Workshop Descriptions, Industry Cases-Volume 2 (pp. 53-56).

Smith, A. 2014, ‘Technology networks for socially useful production,’ Journal of Peer Production 5.

Smith, A., Fressoli, M., Galdos Frisancho, M. and Moreira, A., 2020. Post-automation: Report from an international workshop. University of Sussex.

Söderberg, J. & Maxigas (eds.) 2014. Book of Peer Production. Aarhus, Aarhus Universitetsforlag A/S.

Star, S.L. and Griesemer, J.R., 1989. Institutional ecology, ‘translations’ and boundary objects: Amateurs and professionals in Berkeley’s Museum of Vertebrate Zoology, 1907-39. Social studies of science, 19(3), pp.387-420.

Strübing, J., 2014. Grounded Theory: zur sozialtheoretischen und epistemologischen Fundierung eines pragmatistischen Forschungsstils (3. Auflage). Springer VS, Wiesbaden.

Tanenbaum, J.G., Williams, A.M., Desjardins, A. and Tanenbaum, K., 2013, April. Democratizing technology: pleasure, utility and expressiveness in DIY and maker practice. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 2603-2612).

Troxler, P., 2013. Making the 3rd industrial revolution. In FabLabs: Of machines, makers and inventors. Transcript Publishers, Bielefeld.

Van Maanen, J., 2011. Tales of the Field: On Writing Ethnography. 2nd ed. Chicago, University of Chicago Press.

Wilkes, D.M., Alford, A., Cambron, M.E., Rogers, T.E., Peters, R.A. and Kawamura, K., 1999. Designing for human-robot symbiosis. Industrial Robot 26, pp. 49–58.

Wolf, P., & Troxler, P., 2015. ‘Look Who’s Acting! Applying Actor Network Theory for Studying Knowledge Sharing in a Co-Design Project’. International Journal of Actor-Network Theory and Technological Innovation, 7(3), 15-33.

Woodcock, J. and Graham, M., 2019. The gig economy: a critical introduction. Polity.

Woolgar, S., 1990. Configuring the user: the case of usability trials. The Sociological Review 38, 1_suppl (1990), pp. 58-99.